Text To Speech

Overview

Gameface provides text-to-speech as a feature that makes games more accessible. It aims to help game developers integrate text-to-speech capabilities into the game UI: any text from a cohtml::View can be spoken to the end user through the audio output.

Text-to-speech reads text data aloud to the audio output. It consists of two steps: synthesizing and playing audio. Synthesizing is the process of converting arbitrary text into audio data (e.g. a WAVE audio stream). Playing audio sends the generated audio stream to the audio output, either by interacting directly with the OS or by going through the game engine's audio system.
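To make the two steps concrete, here is a minimal, self-contained C++ sketch. The interfaces ITextSynthesizer and IAudioPlayer and the classes SilenceSynthesizer and CountingPlayer are hypothetical names invented for illustration; they are not the Cohtml or Unreal Engine APIs. The stub synthesizer emits a valid 16-bit mono PCM WAVE stream of silence (10 ms per character) in place of real speech, and the stub player only counts the bytes it would send to the audio output.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical interfaces for illustration only (not Cohtml or Unreal APIs).

// Step 1: synthesizing converts arbitrary text into audio data.
struct ITextSynthesizer {
    virtual ~ITextSynthesizer() = default;
    virtual std::vector<std::uint8_t> Synthesize(const std::string& text) = 0;
};

// Step 2: playing sends the generated audio stream to the audio output.
struct IAudioPlayer {
    virtual ~IAudioPlayer() = default;
    virtual void Play(const std::vector<std::uint8_t>& wave) = 0;
};

// Appends `value` as a little-endian integer that is `bytes` wide.
void AppendLE(std::vector<std::uint8_t>& out, std::uint32_t value, int bytes) {
    assert(bytes >= 1 && bytes <= 4);
    for (int i = 0; i < bytes; ++i) {
        out.push_back(static_cast<std::uint8_t>((value >> (8 * i)) & 0xFFu));
    }
}

// Appends a four-character chunk tag such as "RIFF".
void AppendTag(std::vector<std::uint8_t>& out, const char* tag) {
    for (int i = 0; i < 4; ++i) out.push_back(static_cast<std::uint8_t>(tag[i]));
}

// Stub synthesizer: returns a valid 16-bit mono PCM WAVE stream containing
// silence (10 ms per character) instead of real speech.
class SilenceSynthesizer : public ITextSynthesizer {
public:
    std::vector<std::uint8_t> Synthesize(const std::string& text) override {
        const std::uint32_t sampleRate = 16000;
        const std::uint32_t samples =
            static_cast<std::uint32_t>(text.size()) * (sampleRate / 100);
        const std::uint32_t dataSize = samples * 2;  // 2 bytes per sample

        std::vector<std::uint8_t> wav;
        AppendTag(wav, "RIFF");
        AppendLE(wav, 36 + dataSize, 4);   // size of everything after this field
        AppendTag(wav, "WAVE");
        AppendTag(wav, "fmt ");
        AppendLE(wav, 16, 4);              // fmt chunk size
        AppendLE(wav, 1, 2);               // audio format: PCM
        AppendLE(wav, 1, 2);               // channels: mono
        AppendLE(wav, sampleRate, 4);      // sample rate
        AppendLE(wav, sampleRate * 2, 4);  // byte rate
        AppendLE(wav, 2, 2);               // block align
        AppendLE(wav, 16, 2);              // bits per sample
        AppendTag(wav, "data");
        AppendLE(wav, dataSize, 4);
        wav.resize(wav.size() + dataSize, 0);  // silent samples
        return wav;
    }
};

// Stub player: a real one would hand the stream to the OS or the engine's
// audio system; this one only counts the bytes it receives.
class CountingPlayer : public IAudioPlayer {
public:
    std::size_t bytesPlayed = 0;
    void Play(const std::vector<std::uint8_t>& wave) override { bytesPlayed += wave.size(); }
};

// Runs both steps in order: synthesize, then play.
void Speak(ITextSynthesizer& synth, IAudioPlayer& player, const std::string& text) {
    player.Play(synth.Synthesize(text));
}
```

For example, Speak(synth, player, "hi") produces a 684-byte stream: a 44-byte WAVE header plus 2 × 160 samples × 2 bytes of audio data. Keeping the two steps behind separate interfaces mirrors the choice between playing directly through the OS and playing through the engine's audio system.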

Here are some example use cases that can easily be achieved with this feature:

- When a user hovers over or focuses an element, the element's content is spoken.

- Voicing messages from a game chat.

- Reading urgent notifications instantly to the audio output.

- Reading text from an input field while a user is typing.

Text-to-speech relies on three major components that work together to enable the feature. Each is explained in more detail in the following sections:

- Enable the Coherent TextToSpeech Plugin (UE5.1 and above), which provides the audio synthesizer implementation for Unreal Engine;

- Load the SpeechAPI JS library inside the UI, which controls the synthesizer. At this point, you can already play speech requests manually via the Cohtml Speech API; the next step automates this;

- Optionally, load the ARIA JS library and plugins inside the UI and use the provided JS observer classes to enable automatic speech on different user interactions (see the UI examples for specific usage).

The Basic flow describes how these components work together.

JS SpeechAPI and ARIA JS libraries

To enable text-to-speech support in the UI, you need to load the JS libraries described above. They can be found in the CohtmlPlugin's Resources\uiresources\javascript\text-to-speech folder. For ease of use, they are also copied automatically into your project's Content\uiresources\javascript\text-to-speech folder when you add a new HUD or In-World UI from the Editor's UI interface.

CPP Synthesizer

The FTextToSpeechSynthesizer class is an implementation of the ICohtmlSpeechSynthesizer interface and provides the functionality of synthesizing text to audio and playing that audio. The out-of-the-box synthesizer uses the APIs of Epic's UE5 TextToSpeech Plugin to synthesize and play audio; that plugin supports all platforms and internally uses the Flite library to synthesize the audio.

The synthesizer is provided to the CohtmlPlugin via the ICohtmlPlugin::Get().OnGetSpeechSynthesizer delegate, as shown below:

```cpp
// Factory that creates the synthesizer instance handed to the CohtmlPlugin
ICohtmlSpeechSynthesizer* GetSpeechSynthesizer()
{
    return new FTextToSpeechSynthesizer();
}

// This code needs to be executed before the creation of a Cohtml System to take effect
ICohtmlPlugin::Get().OnGetSpeechSynthesizer.BindStatic(&GetSpeechSynthesizer);
```

In the same way, the default synthesizer can be replaced with a custom user implementation.
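The following self-contained C++ sketch illustrates the factory-delegate pattern used for swapping synthesizers, and why the binding must happen before the Cohtml System is created. PluginSketch, ISpeechSynthesizerSketch, MyCustomSynthesizer and GetCustomSynthesizer are hypothetical stand-ins, with std::function playing the role of the Unreal delegate; the real ICohtmlSpeechSynthesizer methods and the plugin's ownership rules are not described here and are not reproduced.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>

// Hypothetical stand-in for ICohtmlSpeechSynthesizer; the real interface's
// methods are not shown in this document.
struct ISpeechSynthesizerSketch {
    virtual ~ISpeechSynthesizerSketch() = default;
    virtual std::string Name() const = 0;
};

// Hypothetical user implementation that replaces the default synthesizer.
struct MyCustomSynthesizer : ISpeechSynthesizerSketch {
    std::string Name() const override { return "custom"; }
};

// Hypothetical plugin stub mirroring the OnGetSpeechSynthesizer pattern:
// std::function stands in for the Unreal delegate, and the factory is
// queried exactly once, when the system is created.
class PluginSketch {
public:
    std::function<ISpeechSynthesizerSketch*()> OnGetSpeechSynthesizer;

    void CreateSystem() {
        assert(!created_ && "CreateSystem should only be called once");
        created_ = true;
        // No bound factory means no synthesizer, so text-to-speech is unavailable.
        if (OnGetSpeechSynthesizer) {
            active_.reset(OnGetSpeechSynthesizer());
        }
    }

    const ISpeechSynthesizerSketch* Active() const { return active_.get(); }

private:
    bool created_ = false;
    std::unique_ptr<ISpeechSynthesizerSketch> active_;
};

// Factory with the same shape as GetSpeechSynthesizer above.
ISpeechSynthesizerSketch* GetCustomSynthesizer() { return new MyCustomSynthesizer(); }
```

Binding GetCustomSynthesizer and then calling CreateSystem() makes Active() return the custom synthesizer. Binding it only after CreateSystem() leaves that system without a synthesizer, because in this sketch the factory is queried only at creation time.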

The FTextToSpeechSynthesizer is located inside the Coherent TextToSpeech Plugin.

Coherent TextToSpeech Plugin

The Coherent TextToSpeech Plugin provides the synthesizer implementation and depends on the Cohtml Plugin and the UE5 TextToSpeech Plugin. The latter is available in UE5 but works reliably only as of UE5.1. On earlier engine versions, the Coherent TextToSpeech Plugin outputs a warning upon module initialization and does not provide the synthesizer implementation.
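The version requirement can be pictured as a simple start-up check. This is a hypothetical C++ sketch, not the Coherent TextToSpeech Plugin's actual code: IsTextToSpeechSupported and StartupTextToSpeechModule are invented names, and std::fprintf stands in for Unreal's logging. Inside an Unreal module you would typically pass the engine's ENGINE_MAJOR_VERSION and ENGINE_MINOR_VERSION macros.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical helper: true for UE5.1 and later, the first version on which
// the UE5 TextToSpeech Plugin works reliably.
constexpr bool IsTextToSpeechSupported(int engineMajor, int engineMinor) {
    return engineMajor > 5 || (engineMajor == 5 && engineMinor >= 1);
}

static_assert(!IsTextToSpeechSupported(5, 0), "UE5.0 is below the minimum");
static_assert(IsTextToSpeechSupported(5, 1), "UE5.1 is the minimum");

// Hypothetical module start-up: on older engines, print a warning and do not
// provide a synthesizer. Returns whether a synthesizer would be provided.
bool StartupTextToSpeechModule(int engineMajor, int engineMinor) {
    if (!IsTextToSpeechSupported(engineMajor, engineMinor)) {
        std::fprintf(stderr,
                     "Warning: text-to-speech needs UE5.1 or later (found %d.%d); "
                     "no synthesizer will be provided.\n",
                     engineMajor, engineMinor);
        return false;
    }
    // A real module would bind its synthesizer factory at this point.
    return true;
}
```

For example, IsTextToSpeechSupported(5, 0) is false, while IsTextToSpeechSupported(5, 1) and IsTextToSpeechSupported(5, 3) are true.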

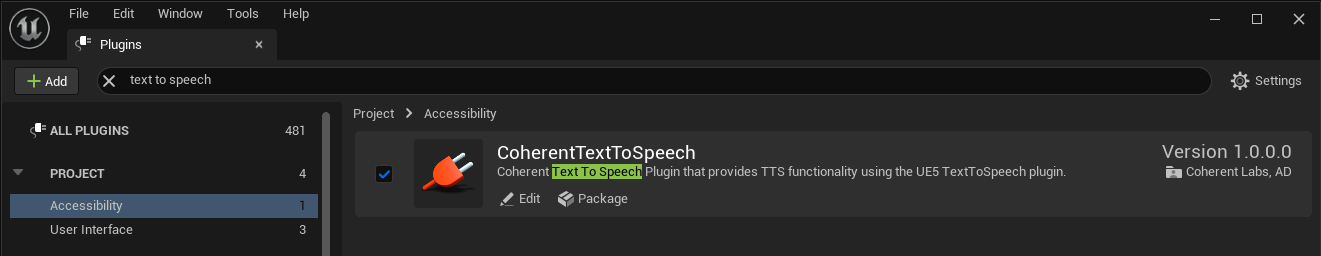

To use text-to-speech inside the UI, you need to enable this plugin for your project or provide a custom synthesizer. The image below shows how to enable the Coherent TextToSpeech Plugin from the Unreal Editor by clicking Edit and then Plugins:

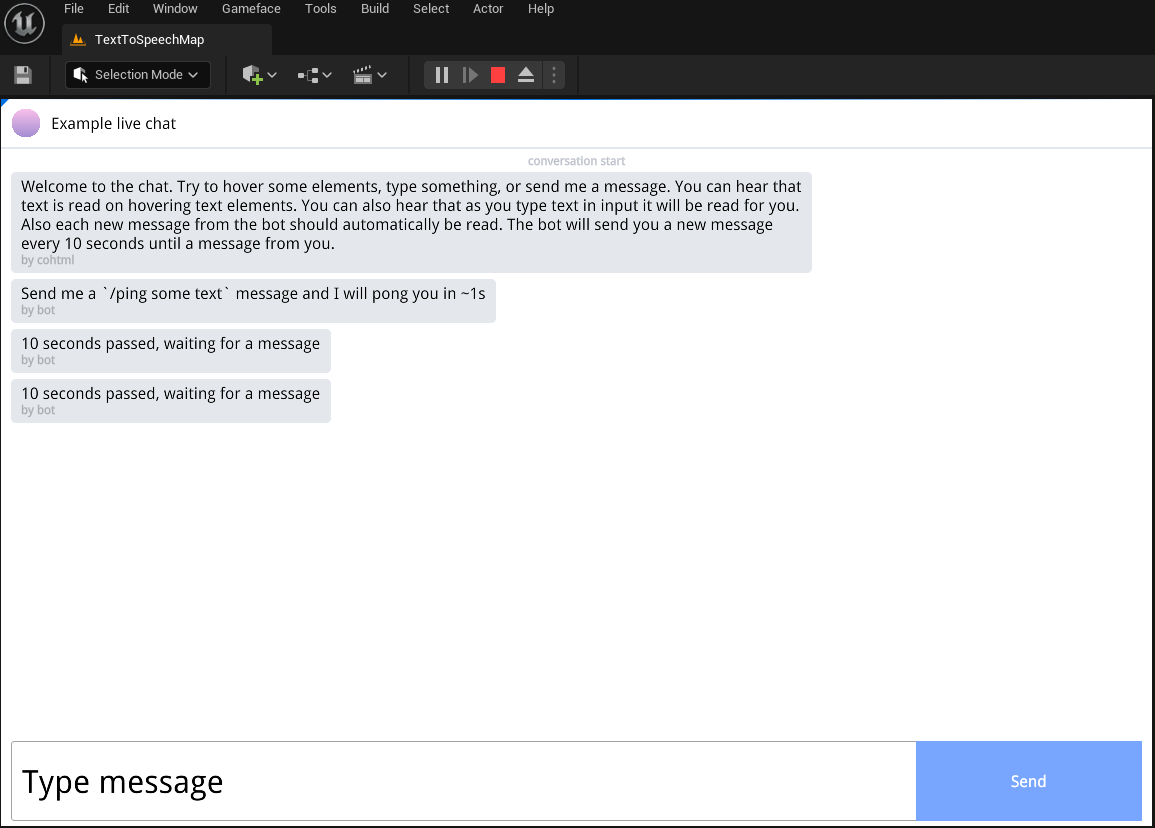

Sample

The CoherentSample project includes a TextToSpeech map for UE5.1 that shows the feature in action by loading Chat.html from Content\uiresources\FrontendSamples\TextToSpeech\examples. The sample displays a simple chat mock-up whose messages are spoken aloud.

Inside the examples folder, you will find more UI examples showing how to set up and use the ARIA JS and JS SpeechAPI libraries inside the UI to achieve text-to-speech on user interactions, such as focus change, hover, input change, and other events.