Text To Speech

Overview

To make games more accessible, Prysm provides the TextToSpeech module. It helps game developers integrate text-to-speech capabilities into the game UI: any text from a cohtml::View can be spoken to the end user through the audio output.

Text-to-speech reads text data aloud through the audio output. It consists of two steps: synthesizing and playing audio. Synthesizing converts arbitrary text into audio data (e.g. a WAVE audio stream). Playing sends the generated audio stream to the audio output, either directly or through the game engine's audio system.
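The two steps can be illustrated with a minimal JavaScript sketch. `synthesizeToWave` and `speak` are hypothetical helpers and are not part of the TextToSpeech module. The stub "synthesizer" writes silence instead of speech, using an arbitrary 50 ms per character. The sketch does accurately show two things: the layout of a canonical 16-bit mono PCM WAVE stream (a 44-byte RIFF header followed by samples), and the hand-off of that stream to an audio sink.

```javascript
// Step 1 (synthesizing), hypothetical stub: a real synthesizer produces speech.
// This one emits silence whose length depends on the text (50 ms per character).
function synthesizeToWave(text, sampleRate = 16000) {
  const samplesPerChar = Math.floor(sampleRate / 20);
  const dataSize = text.length * samplesPerChar * 2; // 16-bit mono: 2 bytes per sample
  const buffer = new ArrayBuffer(44 + dataSize);     // zero-initialized = silence
  const view = new DataView(buffer);
  const writeTag = (offset, tag) => {
    for (let i = 0; i < tag.length; i++) view.setUint8(offset + i, tag.charCodeAt(i));
  };

  writeTag(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true);   // RIFF chunk size
  writeTag(8, "WAVE");
  writeTag(12, "fmt ");
  view.setUint32(16, 16, true);             // fmt chunk size for PCM
  view.setUint16(20, 1, true);              // audio format: 1 = PCM
  view.setUint16(22, 1, true);              // channels: mono
  view.setUint32(24, sampleRate, true);     // sample rate
  view.setUint32(28, sampleRate * 2, true); // byte rate = rate * channels * 2
  view.setUint16(32, 2, true);              // block align = channels * 2
  view.setUint16(34, 16, true);             // bits per sample
  writeTag(36, "data");
  view.setUint32(40, dataSize, true);       // size of the sample data
  return new Uint8Array(buffer);
}

// Step 2 (playing): hand the stream to an audio sink, which stands in for
// either the audio output or the game engine's audio system.
function speak(text, audioSink) {
  const wave = synthesizeToWave(text);
  audioSink(wave);
  return wave.length;
}

const bytes = speak("Hello", (wave) => {
  // A real integration would pass `wave` on for playback here.
});
console.log(bytes); // prints 8044 (44 + 5 * 800 * 2)
```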

Here are some example use cases that this feature makes easy to achieve:

- When a user hovers over or focuses on an element, the element's content is spoken.

- Voicing messages from a game chat.

- Reading urgent notifications instantly to the audio output.

- Reading text from an input field while a user is typing.

Default synthesizer implementations are located in the Modules/TextToSpeech/DefaultSynthesizers folder in the package for a target platform.

How to use

To integrate this feature into a game, you need to:

- Integrate the SpeechAPI C++ library: implement the needed interfaces and properly handle audio and worker tasks.

- Integrate the SpeechAPI JS library into the UI and use it to schedule or discard speech requests.

- Optionally, integrate the ARIA JS library. You can use the default plugins or extend its functionality with your own plugins.

Read this document and examine the packaged TextToSpeech sample to understand the overall flow.

Basic flow

The Text To Speech module contains three major components:

- SpeechAPI C++ library (speech_api_cpp_lib) - implements a cross-platform API for synthesizing speech requests and speaking them to the audio output.

- SpeechAPI JS library (speech_api_js_lib) - implements a queue management system for speech requests with 4 priority channels.

- ARIA JS library - implements a plugin system for building accessibility plugins, such as one that supports ARIA live regions, and using them declaratively in a game UI.

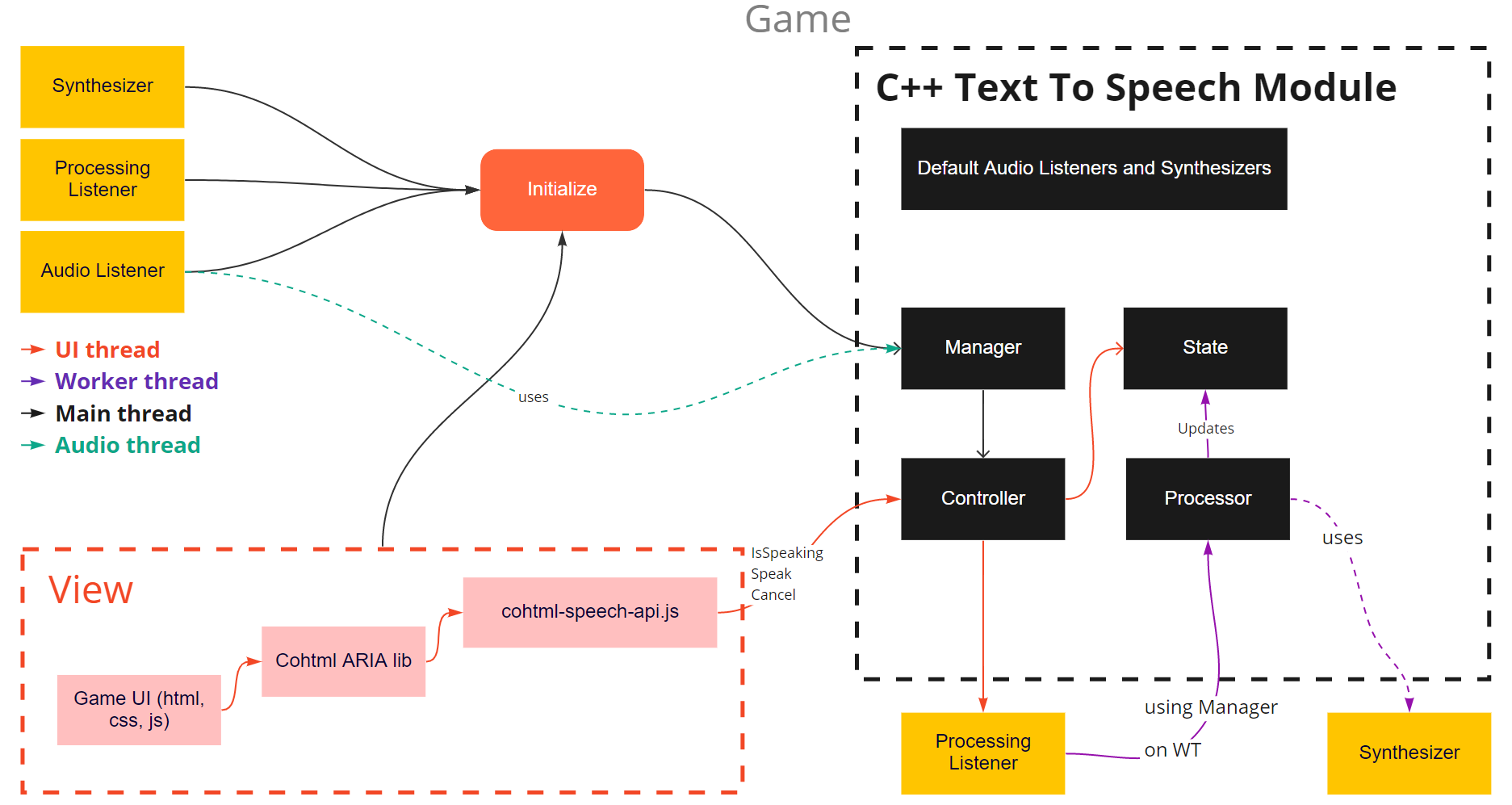

(… Synthesizer, but two references are used to simplify the diagram.)

The basic flow looks like this:

1. The game process initializes the Manager with the needed listeners and synthesizers. Note that default ones can be included from DefaultSynthesizers and DefaultAudioListeners.

2. When a view is ready for bindings, the game enables the view to use the speech module.

3. The view loads the ARIA and SpeechAPI JS libraries.

4. Plugins inside the ARIA library schedule speech requests in the SpeechAPI JS library.

5. The SpeechAPI JS library uses binding calls to ask the controller to schedule a new speech request.

6. The controller updates the state and notifies the client's processing listener that a new task is available.

7. The client invokes processing on a worker thread. The provided synthesizer implementation performs the processing.

8. Once processing is done, the client worker thread asks the manager to process the audio using the processor.

9. If needed, the processor calls the AudioListener callbacks. Note that on some platforms, step 7 already sends the audio directly to the audio output.

10. If needed, the AudioListener schedules the PCM/WAVE data to be played by the in-game audio system.

11. The SpeechAPI JS library uses the controller to manage request queues and check the state of the last scheduled speech request.
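To make the hand-offs between the controller, the client's processing listener, the synthesizer, and the audio listener more concrete, here is a toy model in JavaScript. It is a hypothetical sketch, not the module's API. ToyController and runToyFlow are invented names. An `await` on a microtask stands in for the worker thread, and a string stands in for PCM data. The real components are the C++ SpeechSynthesizerController, SpeechSynthesizerProcessor, and the client-implemented listeners described in the next section.

```javascript
// Toy model of the basic flow; every name here is hypothetical.
class ToyController {
  constructor(processingListener) {
    this.pending = [];    // scheduled requests (part of the controller state)
    this.status = "idle"; // state of the last scheduled request
    this.processingListener = processingListener;
  }

  // A binding call schedules a request. The controller updates its state
  // and notifies the client's processing listener that a task is available.
  schedule(text) {
    this.pending.push(text);
    this.status = "scheduled";
    return this.processingListener(this);
  }
}

async function runToyFlow(texts) {
  const log = [];
  const synthesize = (text) => `pcm(${text})`;            // stand-in synthesizer
  const audioListener = (pcm) => log.push(`play ${pcm}`); // stand-in audio listener

  // The client processes the task off the UI thread. Here the `await` yields
  // to a microtask instead of dispatching to a real worker thread.
  const processingListener = async (controller) => {
    const text = controller.pending.shift();
    log.push(`task ${text}`);
    await null;
    const pcm = synthesize(text);
    audioListener(pcm);         // the processed audio reaches the listener
    controller.status = "done"; // the JS side can later query this state
  };

  const controller = new ToyController(processingListener);
  for (const text of texts) {
    await controller.schedule(text); // one request at a time
  }
  return { log, status: controller.status };
}

runToyFlow(["Hello", "World"]).then(({ log }) => console.log(log.join(" | ")));
// prints: task Hello | play pcm(Hello) | task World | play pcm(World)
```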

For more details, refer to the code documentation in the TextToSpeech module and the TextToSpeech sample.

SpeechAPI C++ library

The most important entities of this system are:

Speech synthesizers manager

SpeechSynthesizerManager is the API entry point for managing speech synthesis for a single View. Use it to interact with the Speech API module.

The SpeechSynthesizerController is used by the manager to handle View binding calls.

The SpeechSynthesizerProcessor is used by the manager to handle processing work, such as audio data processing on an audio thread or speech request processing on a worker thread.

Speech synthesizers

ISpeechSynthesizer is the cross-platform interface for speech synthesis used by the manager. Default implementations are located in the DefaultSynthesizers folder.

Note that some synthesizers expect the platform environment to remain initialized throughout their lifetime. The game is responsible for this initialization, because it may need to use the environment outside the synthesizers' lifetime.

Currently, we support the GDK and PS5 platforms. Each platform defines its own ISpeechSynthesizer implementation. For example, on GDK platforms, the XSpeechSynthesizer API is used to implement the synthesizer interface.

You can implement custom synthesizers and use them on the target platforms. For example, a custom synthesizer backed by a third-party cloud synthesizing service could be used on every platform with Internet access.

Listeners

ISpeechSynthesizerProcessingListener and ISpeechSynthesizerAudioListener are implemented by the client to handle worker tasks and audio tasks, respectively.

SpeechAPI JS library

This library maintains request channels and speaks a single request from a single channel at a time, using the bindings defined in SpeechSynthesizerController (see SPEECH_EVENT_NAMES). It provides an asynchronous API for adding, discarding, and managing speech requests across 4 priority channels.

For more information, refer to the documentation in cohtml-speech-api.js.
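The channel behavior can be pictured with a small synchronous model. This is a hypothetical sketch, not the cohtml-speech-api.js API. The class SpeechChannels and its add/discard/next methods are invented for illustration. Channel 0 is assumed to be the highest priority. The real library is also asynchronous and talks to the controller through bindings. The model shows only the core rules: one request is taken at a time, from the highest-priority channel that has pending requests, and pending requests can be discarded before they are spoken.

```javascript
// Hypothetical model of 4 priority channels; 0 is assumed to be the highest priority.
class SpeechChannels {
  constructor(channelCount = 4) {
    this.channels = Array.from({ length: channelCount }, () => []);
    this.nextId = 1;
  }

  // Queue a request on a channel and return an id that can be used to discard it.
  add(text, channel) {
    if (!Number.isInteger(channel) || channel < 0 || channel >= this.channels.length) {
      throw new RangeError(`invalid channel ${channel}`);
    }
    const id = this.nextId++;
    this.channels[channel].push({ id, text });
    return id;
  }

  // Remove a request that has not been spoken yet. Returns true if it was found.
  discard(id) {
    for (const queue of this.channels) {
      const index = queue.findIndex((request) => request.id === id);
      if (index !== -1) {
        queue.splice(index, 1);
        return true;
      }
    }
    return false;
  }

  // Take the single request to speak next: the oldest request
  // in the highest-priority non-empty channel.
  next() {
    for (const queue of this.channels) {
      if (queue.length > 0) return queue.shift();
    }
    return null;
  }
}

const channels = new SpeechChannels();
channels.add("New chat message", 3);
channels.add("Low health!", 0);
console.log(channels.next().text); // prints: Low health!
```

A FIFO queue per channel keeps requests of equal priority in the order they were added. Scanning the channels in priority order lets an urgent notification jump ahead of queued chat messages.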

ARIA JS library

This library uses the SpeechAPI JS library to implement accessibility plugins.

Several implemented plugins can be found in the plugins folder:

- CohtmlARIAHoverReadPlugin implements speaking hovered elements with aria-label support.

- CohtmlARIAFocusChangePlugin implements speaking the content of focused elements.

- CohtmlARIALiveRegionsPlugin implements speaking ARIA live region changes.

- … and more

To use this library, the UI needs to load the library scripts and the required plugins, initialize the CohtmlARIAManager with the loaded plugins, and ask the ARIA manager to observe a specific DOM subtree:

```html
<!-- Common lib files -->
<script src="../js/aria-js/cohtml-aria-utils.js"></script>
<script src="../js/aria-js/cohtml-aria-common.js"></script>
<script src="../js/aria-js/cohtml-aria-plugin.js"></script>

<!-- SpeechAPI JS library (used by the plugins) -->
<script src="../js/speechAPI/cohtml-speech-api.js"></script>

<!-- Include needed plugins -->
<script src="../js/aria-js/plugins/cohtml-aria-live-region.plugin.js"></script>
<script src="../js/aria-js/plugins/cohtml-aria-hover-read.plugin.js"></script>
<script src="../js/aria-js/plugins/cohtml-aria-focus-change.plugin.js"></script>

<!-- Entry point scripts -->
<script src="../js/aria-js/cohtml-aria-observer.js"></script>
<script src="../js/aria-js/cohtml-aria-manager.js"></script>

<script>
  // Create a manager with specific plugins
  const manager = new CohtmlARIAManager([
    // include only needed plugins
    new CohtmlARIALiveRegionsPlugin(),
    new CohtmlARIAFocusChangePlugin(),
    new CohtmlARIAHoverReadPlugin(),
  ]);

  // Observe a specific document root
  manager.observe(document.body);
</script>
```

Plugins extension

You can add custom plugins and extend the existing ones. All plugins should extend CohtmlARIAPlugin from cohtml-aria-plugin.js.

Here’s an example of a simple custom plugin. For more complex plugins, check existing ones in the plugins folder.

```javascript
// This plugin is an example of how to handle custom events.
// Use new CustomEvent("customuserdefined") and target.dispatchEvent(customEvent)
// to trigger this plugin.
class CohtmlARIACustomEventPlugin extends CohtmlARIAPlugin {
  onDOMEvent(event) {
    if (event.type === "customuserdefined") {
      console.log("Fired customuserdefined event: ", event);
    }
  }

  // DOM event types this plugin wants to receive
  get observedDomEvents() {
    return ["customuserdefined"];
  }
}
```
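To see how `observedDomEvents` and `onDOMEvent` fit together, the following sketch runs outside Cohtml. Both `CohtmlARIAPluginStub` and `observeWithPlugins` are hypothetical. The stub replaces the real base class from cohtml-aria-plugin.js. `observeWithPlugins` is an assumed, simplified picture of how a manager might route observed events to plugins, not CohtmlARIAManager's actual implementation. A plain EventTarget stands in for `document.body`, and `new Event(...)` replaces `new CustomEvent(...)` so the example also runs in Node.js 15 or later.

```javascript
// Hypothetical stand-in for CohtmlARIAPlugin so the sketch runs anywhere.
class CohtmlARIAPluginStub {
  onDOMEvent(event) {}     // called for each observed DOM event
  get observedDomEvents() { // DOM event types the plugin wants to receive
    return [];
  }
}

// Same logic as the plugin above, but it records events instead of logging them.
class CountingCustomEventPlugin extends CohtmlARIAPluginStub {
  constructor() {
    super();
    this.received = [];
  }
  onDOMEvent(event) {
    if (event.type === "customuserdefined") {
      this.received.push(event.type);
    }
  }
  get observedDomEvents() {
    return ["customuserdefined"];
  }
}

// Hypothetical routing: subscribe each plugin to the event types it declares.
function observeWithPlugins(root, plugins) {
  for (const plugin of plugins) {
    for (const type of plugin.observedDomEvents) {
      root.addEventListener(type, (event) => plugin.onDOMEvent(event));
    }
  }
}

const root = new EventTarget(); // in the UI this would be document.body
const plugin = new CountingCustomEventPlugin();
observeWithPlugins(root, [plugin]);
root.dispatchEvent(new Event("customuserdefined")); // delivered to the plugin
root.dispatchEvent(new Event("click"));             // not observed, ignored
console.log(plugin.received.length); // prints 1
```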

React support

The library, including live region change tracking, also supports React single-page applications. Usage does not differ from the standard case: the document fragment root needs to be observed explicitly.

Samples

Several examples of integrating the JS libraries into a game UI can be found in the Samples uiresources, under the TextToSpeech/examples folder.

To see examples in action, refer to the SampleTextToSpeech sample. Note that it’s packaged only on supported platforms.